Modern software systems are incredibly complex. They’re spread across massive networks with countless moving parts. Because of this complexity, unexpected failures are inevitable. Servers crash. Networks slow down. Dependencies fail. Instead of waiting for something to break at 3 AM and scrambling to fix it, there’s a smarter approach: find and fix weaknesses before they cause real problems. That’s what Chaos Engineering is all about. As Dr. Werner Vogels, Amazon’s CTO, famously said, “Everything fails, all the time.” This simple truth is the foundation of an entire field dedicated to preparing systems for inevitable failures.

Chaos Engineering is essentially a way to test how strong your system is by intentionally introducing faults. The goal? Find weak spots and fix them before they lead to real outages. It’s not about creating chaos for chaos’s sake. It’s a careful, scientific method that uses controlled experiments to identify and prevent problems before they happen. This approach fundamentally changes how companies think about system reliability. The old way was reactive: wait for something to fail, then fix it. That’s expensive in terms of downtime, reputation damage, and wasted resources. Chaos Engineering flips this around. It’s proactive. You’re preventing failures instead of just recovering from them. This shift represents a more mature engineering culture where prevention is valued over firefighting.

What Exactly is Chaos Engineering?

Chaos Engineering helps you understand how failures happen in complex systems and gives you practical ways to prevent or reduce them. At its core, it’s about running controlled experiments to uncover hidden weaknesses. Think of it like a vaccination for your software: you’re introducing a small, controlled problem to build immunity against bigger, real-world disasters.

Here’s the key difference between Chaos Engineering and traditional testing. Traditional testing verifies that your system works as expected. It’s checking known features and confirming requirements. You’re testing what you know. Chaos Engineering, on the other hand, looks for unknown weaknesses before they become problems. It’s proactive, not reactive.

This shift matters more than you might think. Modern systems run in unpredictable environments with countless variables. Relying only on traditional testing leaves blind spots. You won’t know how robust your system really is until it’s under real pressure. By intentionally introducing controlled failures, you’ll discover which parts of your system are solid and which need work.

This organized approach helps companies build stronger products, which directly impacts their bottom line and customer satisfaction. But there’s more to it than just technical benefits. When systems aren’t resilient enough, the hidden costs add up fast. You’re not just losing money during outages. You’re also slowly losing customer trust, burning out your operations team with constant incident response, exhausting developers who are always firefighting, and missing opportunities for innovation because your resources are tied up maintaining basic stability. Chaos Engineering tackles these problems early, creating a more sustainable and productive engineering environment.

Why is System Resiliency So Crucial Today?

When we talk about resiliency in software, we’re talking about your system’s ability to keep working even when things go wrong. It might be running in a degraded state, but it’s still keeping core functions alive. More importantly, it’s about how quickly you can get back to normal operations. Real resilience means you can anticipate problems, absorb the impact, adapt to changes, and recover quickly from whatever the environment throws at you.
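One common way to keep core functions alive in a degraded state is to wrap calls to non-critical dependencies. If the call fails, the wrapper serves a fallback value instead of failing the whole request. Here is a minimal Python sketch of that pattern. The `get_recommendations` dependency and the empty-list fallback are hypothetical examples, not part of any specific system.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def with_fallback(call: Callable[[], T], fallback: T) -> tuple[T, bool]:
    """Run `call`; on any exception return `fallback` instead.

    Returns (value, degraded) so callers and metrics can tell when the
    system is serving a degraded response.
    """
    try:
        return call(), False
    except Exception:
        # Swallowing the error keeps the core request alive; a real
        # service would also log it and emit a metric here.
        return fallback, True


def get_recommendations() -> list[str]:
    # Hypothetical non-critical dependency that is currently down.
    raise ConnectionError("recommendation service unavailable")


def render_product_page(product: str) -> dict:
    recs, degraded = with_fallback(get_recommendations, fallback=[])
    # The core function (showing the product) still works when the
    # optional recommendations section cannot be loaded.
    return {"product": product, "recommendations": recs, "degraded": degraded}


if __name__ == "__main__":
    print(render_product_page("headphones"))
```

Returning an explicit `degraded` flag is a design choice. It lets dashboards count how often the system runs in degraded mode, which feeds directly into the steady-state metrics discussed later.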

In today’s hyper-connected world, even brief outages have massive consequences. Think about e-commerce during Black Friday, banking systems processing millions of transactions, or healthcare systems managing patient data. When these systems fail, the damage is immediate and severe: revenue losses, brand damage, frustrated customers, and potential regulatory penalties. The EU’s Digital Operational Resilience Act (DORA), for example, requires financial entities to regularly test their digital operational resilience to identify weaknesses. This regulatory pressure isn’t going away. Building resilient systems isn’t optional anymore; it’s essential for protecting your business, your customers, and your reputation.

The strategic importance of resilience goes well beyond technical features. It has become a competitive differentiator and, increasingly, a regulatory requirement, because uptime and reliability directly drive customer satisfaction, revenue, and compliance. Companies that proactively invest in resilience using practices like Chaos Engineering are better positioned to meet strict regulations and to outpace competitors with more stable, trustworthy services. By identifying and fixing weaknesses early, Chaos Engineering also reduces the long-term, often unseen costs of fragility described above, contributing to a more sustainable and productive engineering organization.

What are the Guiding Principles of Chaos Engineering?

Chaos Engineering isn’t about randomly breaking things and seeing what happens. It’s a disciplined, scientific approach to understanding how systems behave under stress. These core principles guide how you design and run experiments, ensuring you get valuable insights instead of causing accidental damage.

- Build a Hypothesis Around Steady State Behavior: Before introducing any disruption, you need to understand what normal looks like. Your steady state is the system’s baseline behavior, measured by key metrics like throughput, error rates, and response times. Once you’ve established this baseline, you form a hypothesis about how the system should behave when you introduce a specific fault. For example: “Even if our payment microservice fails, users can still browse products and add items to their cart.” This baseline is crucial for measuring the actual impact of your chaos experiments.

- Mimic Real-World Problems: Your experiments should simulate actual failures that happen in production environments. This ensures your insights are practical and actionable. Real-world problems include server crashes, network latency, database slowdowns, sudden traffic spikes, or third-party API failures. The more realistic your simulations, the more valuable your learnings.

- Test in Production (or Production-like Environments): Systems behave differently under real load with real traffic patterns. For the most accurate results, run experiments in production or in environments that closely mirror it. Yes, this sounds risky, but you’ll do it with strict safety controls that limit the blast radius: the share of users, hosts, or services an experiment can affect. Start small in staging environments to build confidence, then gradually move to production with tight monitoring. By carefully controlling the scope of your experiments, you can isolate variables, observe specific impacts, and learn how your system reacts to particular faults without causing widespread damage.

-

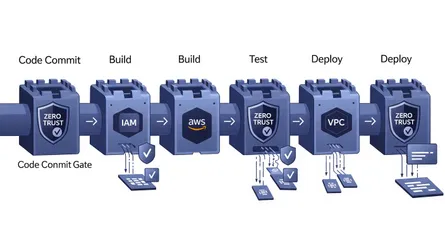

Automate Your Chaos Tests: Running experiments manually is time-consuming and doesn’t scale. Automation ensures tests run consistently and reliably. The best approach? Integrate chaos testing directly into your CI/CD pipeline. This way, you’re catching problems early during development and deployment, not after they reach production.
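The principles above can be sketched as a tiny experiment harness in Python. This is a minimal illustration, not any tool’s API:

- `measure`, `inject`, and `rollback` are hypothetical callables you would wire to your own monitoring and fault-injection tooling.
- The thresholds are assumed values.

The harness first confirms the steady state, then injects the fault and re-tests the hypothesis. It always rolls the fault back, and it refuses to start from an unhealthy baseline.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class SteadyState:
    """Hypothesis thresholds for key metrics (assumed values)."""
    max_error_rate: float  # e.g. 0.01 == 1% of requests failing
    max_p99_ms: float      # 99th-percentile latency budget

    def holds(self, metrics: Dict[str, float]) -> bool:
        return (metrics["error_rate"] <= self.max_error_rate
                and metrics["p99_ms"] <= self.max_p99_ms)


def run_experiment(
    hypothesis: SteadyState,
    measure: Callable[[], Dict[str, float]],
    inject: Callable[[], None],
    rollback: Callable[[], None],
) -> str:
    # 1. Never start from an unhealthy baseline.
    if not hypothesis.holds(measure()):
        return "aborted: steady state not met before injection"
    try:
        # 2. Introduce the fault within a limited blast radius.
        inject()
        # 3. Re-measure and test the hypothesis while the fault is active.
        during = measure()
        return "hypothesis held" if hypothesis.holds(during) else "weakness found"
    finally:
        # 4. Always remove the fault, even if measuring raised an exception.
        rollback()
```

In a CI/CD pipeline, following the automation principle, a step could call `run_experiment` and fail the build when it returns "weakness found".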

One thing you absolutely can’t skip: strong monitoring and observability. Without good monitoring, you can’t define your steady state, measure the impact of experiments, or detect when something goes wrong. Investing in comprehensive monitoring and logging is both a prerequisite and an ongoing requirement for successful Chaos Engineering.

There’s another benefit that’s often overlooked. Chaos Engineering makes your team operationally stronger. By regularly simulating failures, teams practice incident response, test their alerting systems, and sharpen their debugging skills. This regular exposure to stress builds confidence and creates a more mature, resilient engineering team. You’re not just finding system bugs. You’re also uncovering and fixing gaps in your operational processes and team readiness.

Chaos Monkey: The Original Primate of Production Chaos

Chaos Monkey was born out of necessity at Netflix. As they pioneered cloud-native architecture, they faced a critical challenge: keeping their streaming service available while running on thousands of cloud servers. Their solution? Build a tool that randomly shuts down instances in production. Sounds crazy, right? But this seemingly destructive action had a powerful purpose. It forced engineers to design services that could handle instance failures from day one. The tool exposed engineers to failures frequently, encouraging them to build naturally resilient services.

Chaos Monkey’s job is simple: randomly terminate virtual machine instances and containers during configured time windows. While its main action is random termination, you can customize its behavior through its configuration file and its integration with Spinnaker (Netflix’s continuous delivery platform). It also supports an outage checker that can stop it from running during an ongoing incident so it won’t make existing problems worse, although you have to supply that check yourself as custom code.
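The core behavior described here can be sketched in a few lines of Python: pick a random instance, but only inside a configured time window and never during an ongoing outage. This is not Chaos Monkey’s actual code, which is written in Go. The instance IDs, the `outage_in_progress` flag, and the weekday-only rule are illustrative assumptions.

```python
import random
from datetime import datetime
from typing import Optional, Sequence


def pick_victim(
    instances: Sequence[str],
    now: datetime,
    start_hour: int = 9,
    end_hour: int = 17,
    outage_in_progress: bool = False,
    rng: Optional[random.Random] = None,
) -> Optional[str]:
    """Return one instance ID to terminate, or None if it should not run.

    `now` is assumed to already be in the team's local time zone.
    """
    # Outage check: never add failures on top of an ongoing incident.
    if outage_in_progress or not instances:
        return None
    # Only act inside the configured window (e.g. business hours),
    # when engineers are around to respond.
    if not (start_hour <= now.hour < end_hour):
        return None
    # Assumption: skip weekends (Monday=0 ... Sunday=6).
    if now.weekday() >= 5:
        return None
    return (rng or random).choice(list(instances))
```

Passing an explicit `random.Random` makes the choice reproducible in tests while still being random in production.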

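Here’s an illustrative configuration showing the kinds of settings involved. Treat it as a sketch rather than Chaos Monkey’s real schema. Chaos Monkey itself reads global settings (such as the enabled flag, accounts, and start and end hours) from a TOML file, chaosmonkey.toml. Per-app settings such as termination frequency and exceptions are managed through Spinnaker.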

```yaml
# Illustrative Chaos Monkey-style settings for AWS (not Chaos Monkey's real schema)
accounts:
  - name: production
    enabled: true
    # Only run during business hours (Pacific time)
    schedule:
      enabled: true
      startHour: 9
      endHour: 17
      timezone: America/Los_Angeles

terminationStrategy:
  # Randomly terminate instances
  randomSelection: true

  # Probability of termination (10% chance)
  probability: 0.1

  # Maximum number of instances to terminate per day
  maxTerminationsPerDay: 5

# Exclude critical services
exceptions:
  - serviceName: auth-service
  - serviceName: payment-processor

# Notification settings
notifications:
  email:
    - devops@example.com
  slack:
    channel: '#chaos-engineering'
```

Despite its historical significance, Chaos Monkey has some significant limitations. Its biggest constraint is limited attack types: it only does one thing, random instance termination. This seriously limits the failure scenarios you can simulate. Its unpredictable, completely random nature also means you have limited control over the blast radius. This lack of precision can cause more harm than good if your system isn’t ready.

Chaos Monkey also has major dependencies. It needs Spinnaker and MySQL for full integration. A big downside? Netflix no longer actively develops or maintains it, making it less practical for teams looking for ongoing support and new features. It also lacks built-in recovery or rollback mechanisms. Any fault tolerance or outage detection requires custom code.

Chaos Monkey works with environments that Spinnaker supports: AWS, Google Compute Engine (GCE), Azure, and Kubernetes. It’s been specifically tested with AWS, GCE, and Kubernetes. If your applications are managed through Spinnaker, you can set up Chaos Monkey to terminate instances within these cloud and container platforms.

The evolution from Chaos Monkey to modern tools shows a shift from forced resilience to controlled learning. Chaos Monkey’s original idea was revolutionary in its simplicity: by randomly terminating instances, it forced engineers to build resilience into their services. But its limitations (a single random fault type and no ongoing maintenance) show that random disruption can uncover weaknesses, while lasting resilience needs a more controlled, varied, and analytical approach. The industry has moved from a raw “break it to see what happens” mindset to a more mature, controlled, and data-driven experimental science.

Gremlin: The Modern Platform for Controlled Chaos

Gremlin stands out as a leading cloud-native platform built specifically to make Chaos Engineering safe, easy, and secure. Its main goal? Improve system uptime, validate reliability, and help companies build a strong reliability culture. Unlike Chaos Monkey’s random approach, Gremlin gives you precise control over fault injection.

Gremlin provides an extensive fault injection library that lets you simulate real-world failures across different system layers:

Resource Attacks: These test how your system handles resource constraints.

- CPU attacks stress test high-demand scenarios

- Memory attacks check for leaks or resource-heavy applications

- I/O attacks create read/write pressure to test storage performance

- GPU attacks stress AI, LLM, and video encoding workloads

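Here’s a simple example of running a CPU attack against a container with the Gremlin CLI. The flags in this and the following examples are illustrative. The exact syntax depends on your Gremlin agent version, so check the Gremlin CLI documentation before running them: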

```bash
#!/bin/bash

# Attack all cores on a specific container for 60 seconds
gremlin attack-container \
  --container-id abc123 \
  --type cpu \
  --cores 0 \
  --length 60
```

Network Attacks: These simulate network problems.

- Blackhole attacks drop all network traffic to simulate complete outages

- Latency attacks inject delays to test responsiveness under slow networks

- Packet Loss attacks drop or corrupt traffic to mimic poor network conditions

- DNS attacks block DNS access to test fallback mechanisms
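Gremlin applies these network attacks at the host or container level. For unit tests, an application-level approximation can help you reason about what latency or packet loss does to a caller. The Python decorator below is a minimal sketch of that idea, not how Gremlin works internally. Its assumptions:

- `delay_s` and `drop_rate` are made-up parameters.
- A dropped request is modeled as a `TimeoutError`.
- `fetch_price` is a hypothetical downstream call.

```python
import functools
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def network_fault(delay_s: float = 0.0, drop_rate: float = 0.0,
                  rng: Optional[random.Random] = None):
    """Wrap a call with simulated latency and request loss."""
    rng = rng or random.Random()

    def decorator(fn: Callable[..., T]) -> Callable[..., T]:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs) -> T:
            # Latency fault: every call is slowed down.
            if delay_s > 0:
                time.sleep(delay_s)
            # Loss fault: a fraction of calls never get a response.
            if rng.random() < drop_rate:
                raise TimeoutError("simulated dropped request")
            return fn(*args, **kwargs)
        return wrapper
    return decorator


@network_fault(delay_s=0.05, drop_rate=0.3, rng=random.Random(7))
def fetch_price(sku: str) -> float:
    # Hypothetical downstream call.
    return 19.99
```

Calling `fetch_price` in a loop and counting `TimeoutError`s is a quick way to check whether a caller’s retry and timeout logic behaves as intended.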

Here’s how to inject network latency:

```bash
#!/bin/bash

# Add 100ms latency to all egress traffic for 2 minutes
gremlin attack-container \
  --container-id abc123 \
  --type latency \
  --delay 100 \
  --length 120
```

State Attacks: These test application and system state changes.

- Process Killer stops specific processes to simulate application crashes

- Shutdown attacks restart the host OS to test host failure recovery

- Time Travel attacks change system time to test for clock drift or certificate expiry

- Certificate checks verify certificate chains for expiration
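Time Travel attacks and certificate checks both come down to evaluating time-dependent logic under a shifted clock. The Python sketch below shows the idea. A Time Travel attack changes the system clock; this sketch instead passes an offset into a hypothetical `cert_status` check, so you can ask “what breaks 30 days from now?” without touching the host. The certificate dates and the 14-day warning window are made up for the example.

```python
from datetime import datetime, timedelta, timezone


def cert_status(not_after: datetime, now: datetime,
                warn_days: int = 14) -> str:
    """Classify a certificate's expiry relative to `now`."""
    remaining = not_after - now
    if remaining <= timedelta(0):
        return "expired"
    if remaining <= timedelta(days=warn_days):
        return "expiring soon"
    return "ok"


def status_after_clock_shift(not_after: datetime, shift: timedelta,
                             now: datetime) -> str:
    # Simulates clock drift or "time travel" without changing the host clock.
    return cert_status(not_after, now + shift)


if __name__ == "__main__":
    now = datetime(2025, 6, 6, tzinfo=timezone.utc)
    not_after = datetime(2025, 7, 1, tzinfo=timezone.utc)  # hypothetical cert
    for days in (0, 15, 30):
        print(days, status_after_clock_shift(not_after, timedelta(days=days), now))
```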

Here’s a process killer example:

```bash
#!/bin/bash

# Kill all nginx processes and repeat every 5 seconds for 2 minutes
gremlin attack-container \
  --container-id abc123 \
  --type process_killer \
  --process nginx \
  --interval 5 \
  --length 120
```

You can combine these attack types to create hundreds of pre-built and custom scenarios for very targeted, complex simulations.

Gremlin’s platform supports multi-environment deployments. It’s truly cloud-native and runs almost anywhere: all major public clouds (AWS, Azure, GCP), Linux, Windows, containerized environments like Kubernetes, and even on-premise with Gremlin Private Edition. This wide compatibility makes it versatile for companies with diverse infrastructure.

Beyond fault injection, Gremlin offers features that enhance your Chaos Engineering practice. Its GameDay Manager helps organize reliability events, cutting down prep and execution time. The platform automatically analyzes and stores experiment results, so teams can review outcomes and turn data into real improvements. It integrates with Jira for efficient action item tracking. Gremlin also provides reliability scoring and continuous risk monitoring, helping you define, measure, and track service reliability across your organization. It can automatically discover and test system dependencies, giving you deeper insights into system weaknesses.

Gremlin’s extensive feature set (from diverse fault types and multi-cloud support to GameDay management and Jira integration) shows that Chaos Engineering has matured into a complete managed service. This evolution reflects the growing complexity of modern systems and the increasing need for advanced reliability management.

What makes Gremlin particularly valuable is its focus on safety and control. By positioning itself as making Chaos Engineering safe, easy, and secure, Gremlin directly addresses common concerns about the practice. Many teams worry about causing more harm than good. By offering precise control over fault injection, automatic halt and rollback features, and a user-friendly interface, Gremlin transforms a potentially risky practice into a controlled, value-generating activity. This changes how willing businesses are to adopt these methods, especially in sensitive production environments.
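The idea of automatic halt and rollback can be illustrated independently of any product. The Python sketch below runs a scenario as an ordered list of attack steps and checks a health probe after each one. On failure it halts; in every case it reverts the applied steps, newest first. It is a conceptual model, not Gremlin’s API: `Step`, `health_ok`, and the step names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    name: str
    apply: Callable[[], None]
    revert: Callable[[], None]


def run_scenario(steps: List[Step], health_ok: Callable[[], bool]) -> List[str]:
    """Apply steps in order, halting as soon as a health check fails.

    Every applied step is reverted at the end, newest first. Returns a
    log of the actions taken, for review after the run.
    """
    log: List[str] = []
    applied: List[Step] = []
    try:
        for step in steps:
            step.apply()
            applied.append(step)
            log.append(f"applied {step.name}")
            if not health_ok():
                log.append(f"halted after {step.name}")
                break
    finally:
        # Roll back in reverse order so the latest fault is removed first.
        for step in reversed(applied):
            step.revert()
            log.append(f"reverted {step.name}")
    return log
```

Keeping the rollback in a `finally` block means faults are removed even if a step or the health probe itself raises an exception. This is the property that makes such tooling safe to run in production.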

Chaos Monkey vs. Gremlin: Which Tool is Right for You?

Choosing between Chaos Monkey and Gremlin depends on your company’s specific needs, budget, and chaos engineering maturity. While both tools aim to make systems more resilient, their approaches and capabilities are quite different.

Here’s a side-by-side comparison:

| Feature/Aspect | Chaos Monkey | Gremlin |
|---|---|---|
| Origin & Maintenance | Developed by Netflix, historically significant. No longer actively developed or maintained. | Commercial product, actively developed and maintained. |
| Control Over Faults | Injects faults randomly, giving a more realistic test environment for broad resilience. Limited control over blast radius and execution. | Offers precise control over fault injection, allowing targeted experiments. Provides automatic halt and rollback mechanisms. |
| Types of Faults | Primarily one attack type: random instance termination (shutdown). | Wide range of fault types: CPU, Memory, Disk, I/O, Blackhole, Latency, Packet Loss, Process Killer, Shutdown, DNS, Time Travel, Certificate Expiry, GPU. |
| Cloud/Environment Support | Tightly integrated with Netflix OSS and AWS. Works with AWS, GCE, and Kubernetes via Spinnaker. Limited multi-cloud or hybrid cloud support. | Cloud-native platform supporting all public clouds (AWS, Azure, GCP), Linux, Windows, Kubernetes, and on-premise environments. |
| Ease of Use | Easy to set up and use for its specific function. Requires Spinnaker and MySQL for full integration. | User-friendly web interface and CLI. May require more initial setup and configuration than Chaos Monkey. |
| Cost | Free and open-source. | Requires payment for advanced features and enterprise use. |
| Reporting & Analytics | No built-in detailed reporting or analysis; requires custom code for outage detection and fault tolerance. | Offers rich analytics and visualization tools, automatic analysis, and storage of results. Integrates with Jira for action item tracking. |
| Safety & Risk Mitigation | May cause system downtime and false positives. High risk if unprepared due to randomness. | Designed for safety and security. Allows starting small and scaling experiments, with features to confidently recreate incidents. |
| Additional Features | Basic functionality for instance termination. | GameDay Manager, scenario sharing, scheduled scenarios, reliability scoring, dependency discovery, failure flags, private edition. |

This table gives you a clear, easy-to-scan comparison for quickly grasping the main differences and trade-offs.

When choosing between these tools, consider your priorities. If cost is your main concern and you only need basic random instance termination, Chaos Monkey (or similar open-source tools like Pumba for Docker/Kubernetes) could be a good starting point. However, if you need precise control over fault injection, diverse fault types, full multi-cloud support, advanced features like GameDay management, and detailed analytics, Gremlin is the stronger choice for enterprise-level reliability efforts.

Ultimately, your decision should factor in your team’s chaos engineering maturity, available budget, and the complexity of the systems you’re testing. The clear difference between Chaos Monkey and Gremlin reflects how Chaos Engineering has become a commercial and professional discipline. What started as an internal Netflix experiment has grown into a dedicated industry with advanced platforms, showing the growing recognition that reliability is a core business function.

How Do We Inject Chaos in AWS Environments?

In AWS environments, Chaos Engineering follows a structured framework designed to find resilience gaps in your workloads. It’s not about randomly breaking production systems. It’s a valuable tool for understanding how your workloads behave under simulated failure conditions.

The most common approach uses AWS Fault Injection Service (FIS, formerly AWS Fault Injection Simulator), a managed service built specifically for running chaos engineering experiments on AWS. Here’s the typical workflow:

- Define Steady State: First, define the normal operating condition for your systems. This baseline lets you measure what happens when you inject chaos. Collect and analyze data during stable conditions to set performance baselines and identify normal behavior patterns.

- Design Chaos Tests: Plan controlled chaos experiments to simulate different failure scenarios against that steady state. Identify the specific components or services to target and determine the experiment’s scope and severity. AWS FIS is well suited to this: an experiment template defines the targets, the fault actions, and the stop conditions.

- Execute Experiments: Set up the necessary infrastructure for running tests, including test environments, monitoring, and logging systems. Then run your defined experiments.

- Analyze and Fix: During experiments, continuously monitor system behavior. Collect and analyze data to assess the impact on performance, stability, and resilience, comparing it against your baselines. Then fix the weaknesses you uncover.

- Iterate and Improve: Repeat these steps periodically to ensure your system remains resilient over time.
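Defining the steady state and analyzing results both hinge on comparing experiment data against a baseline. Here is a minimal Python sketch of that comparison. It assumes you have exported per-request latency samples (for example, from CloudWatch) into plain lists. The 20% tolerance is an arbitrary assumption.

```python
import statistics
from typing import Dict, Sequence


def baseline(samples_ms: Sequence[float]) -> Dict[str, float]:
    """Summarize latency samples collected during stable operation."""
    # quantiles(n=100) returns the 99 cut points for the 1st..99th percentiles.
    cuts = statistics.quantiles(samples_ms, n=100)
    return {"p50": cuts[49], "p99": cuts[98]}


def regressions(base: Dict[str, float], observed: Dict[str, float],
                tolerance: float = 0.20) -> Dict[str, float]:
    """Return metrics that grew by more than `tolerance`, as observed/base ratios."""
    return {
        name: observed[name] / base[name]
        for name in base
        if observed[name] > base[name] * (1 + tolerance)
    }
```

An empty result from `regressions` means the experiment stayed within the steady state. Any entries point to where the “fix” work should start.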

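Here’s a practical example that uses AWS FIS to terminate two tagged EC2 instances in a staging environment. Save the template as aws-fis-ec2-termination.json. The account ID, role ARN, and alarm ARN are placeholders: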

```json
{
  "description": "Test application resilience by terminating EC2 instances",
  "targets": {
    "ec2-instances": {
      "resourceType": "aws:ec2:instance",
      "resourceTags": {
        "Environment": "staging",
        "ChaosReady": "true"
      },
      "selectionMode": "COUNT(2)"
    }
  },
  "actions": {
    "terminate-instances": {
      "actionId": "aws:ec2:terminate-instances",
      "parameters": {},
      "targets": {
        "Instances": "ec2-instances"
      }
    }
  },
  "stopConditions": [
    {
      "source": "aws:cloudwatch:alarm",
      "value": "arn:aws:cloudwatch:us-east-1:123456789012:alarm:HighErrorRate"
    }
  ],
  "roleArn": "arn:aws:iam::123456789012:role/FISExperimentRole",
  "tags": {
    "Name": "EC2-Termination-Test",
    "Team": "Platform"
  }
}
```

To run this experiment:

```bash
#!/bin/bash

# Create the experiment template
TEMPLATE_ID=$(aws fis create-experiment-template \
  --cli-input-json file://aws-fis-ec2-termination.json \
  --query 'experimentTemplate.id' \
  --output text)

echo "Created experiment template: $TEMPLATE_ID"

# Start the experiment
EXPERIMENT_ID=$(aws fis start-experiment \
  --experiment-template-id "$TEMPLATE_ID" \
  --query 'experiment.id' \
  --output text)

echo "Started experiment: $EXPERIMENT_ID"

# Monitor experiment status
aws fis get-experiment \
  --id "$EXPERIMENT_ID" \
  --query 'experiment.state.status' \
  --output text
```

Examples of Chaos Experiments in AWS Services:

- Amazon Aurora: Simulate network latency between Aurora instances, introduce failures in replica instances, or test increased load on read/write capacity.

- Amazon Kinesis: Simulate higher data ingestion rates to test stream scaling.

- Amazon EC2: Test Spot Instance interruptions to verify application handling of sudden terminations.

- Amazon DynamoDB: Deny traffic to/from regional endpoints to test failover mechanisms.
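The CLI script above starts the experiment and reads its status once. To drive the run from Python instead, the sketch below uses boto3’s FIS client (`start_experiment` and `get_experiment`). It starts an experiment from an existing template and polls until the experiment reaches a terminal state. Some notes on the assumptions:

- The client is passed in, so the loop can be tested without AWS access.
- The 15-second poll interval is an assumption.
- The template ID would come from `create_experiment_template` or from the script above.

```python
import time
from typing import Any, Callable

# Terminal values of experiment.state.status reported by FIS
TERMINAL = {"completed", "stopped", "failed", "cancelled"}


def run_fis_experiment(client: Any, template_id: str,
                       poll_seconds: float = 15.0,
                       sleep: Callable[[float], None] = time.sleep) -> str:
    """Start an FIS experiment and block until it finishes.

    `client` is expected to be boto3.client("fis"); any object with the
    same start_experiment/get_experiment methods works (e.g. a test fake).
    """
    started = client.start_experiment(experimentTemplateId=template_id)
    experiment_id = started["experiment"]["id"]
    while True:
        state = client.get_experiment(id=experiment_id)["experiment"]["state"]
        if state["status"] in TERMINAL:
            # "stopped" can mean a stop condition (such as the CloudWatch
            # alarm) fired or someone stopped it; state.get("reason") explains.
            return state["status"]
        sleep(poll_seconds)
```

In real use you would pass `boto3.client("fis")` and treat any result other than "completed" as a signal to investigate before moving on.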

A unique challenge arises with serverless environments like AWS Lambda. You don’t control or access the underlying infrastructure, making traditional fault injection difficult. Here are two approaches:

1. Using a Library in Lambda Code:

You can inject faults directly within the Lambda function using a library:

```javascript
import {DynamoDBClient, PutItemCommand} from '@aws-sdk/client-dynamodb';
import {S3Client, GetObjectCommand} from '@aws-sdk/client-s3';

// Initialize AWS SDK v3 clients
const dynamoClient = new DynamoDBClient({region: process.env.AWS_REGION});
const s3Client = new S3Client({region: process.env.AWS_REGION});

/**
 * Chaos injection library for Lambda functions
 * Supports error injection, latency injection, and custom failure scenarios
 */
class ChaosInjector {
  constructor(config = {}) {
    this.errorRate = parseFloat(config.errorRate) || 0;
    this.latencyMs = parseInt(config.latencyMs, 10) || 0;
    this.failureTypes = config.failureTypes || ['generic_error'];
    this.enabled = config.enabled !== false;
  }

  /**
   * Inject artificial latency
   */
  async injectLatency() {
    if (this.latencyMs > 0) {
      console.log(`[Chaos] Injecting ${this.latencyMs}ms latency`);
      await new Promise((resolve) => setTimeout(resolve, this.latencyMs));
    }
  }

  /**
   * Inject random errors based on error rate
   */
  async injectError() {
    if (Math.random() < this.errorRate) {
      const failureType =
        this.failureTypes[Math.floor(Math.random() * this.failureTypes.length)];

      console.error(`[Chaos] Injecting failure: ${failureType}`);

      switch (failureType) {
        case 'timeout_error':
          throw new Error('Chaos: Simulated timeout error');
        case 'throttle_error': {
          const error = new Error('Chaos: Simulated throttling');
          error.code = 'ThrottlingException';
          throw error;
        }
        case 'service_unavailable': {
          const unavailableError = new Error('Chaos: Service unavailable');
          unavailableError.statusCode = 503;
          throw unavailableError;
        }
        default:
          throw new Error('Chaos: Simulated generic failure');
      }
    }
  }

  /**
   * Execute chaos injection
   */
  async inject() {
    if (!this.enabled) {
      return;
    }

    await this.injectLatency();
    await this.injectError();
  }
}

// Initialize chaos injector from environment variables
const chaos = new ChaosInjector({
  errorRate: process.env.CHAOS_ERROR_RATE || 0,
  latencyMs: process.env.CHAOS_LATENCY_MS || 0,
  failureTypes: process.env.CHAOS_FAILURE_TYPES?.split(',') || ['generic_error'],
  enabled: process.env.CHAOS_ENABLED !== 'false',
});

/**
 * Lambda handler with chaos engineering
 */
export const handler = async (event, context) => {
  // The Node.js Lambda context exposes the request ID as awsRequestId
  const requestId = context.awsRequestId;
  console.log('Processing request:', {requestId});

  try {
    // Inject chaos before processing
    await chaos.inject();

    // Your actual business logic
    const result = await processRequest(event);

    return {
      statusCode: 200,
      headers: {
        'Content-Type': 'application/json',
        'X-Request-Id': requestId,
      },
      body: JSON.stringify(result),
    };
  } catch (error) {
    console.error('Error processing request:', {
      error: error.message,
      stack: error.stack,
      requestId,
    });

    return {
      statusCode: error.statusCode || 500,
      headers: {
        'Content-Type': 'application/json',
        'X-Request-Id': requestId,
      },
      body: JSON.stringify({
        error: error.message,
        requestId,
      }),
    };
  }
};

/**
 * Example business logic using AWS SDK v3
 */
async function processRequest(event) {
  const {userId, action} = JSON.parse(event.body || '{}');

  // Example: Write to DynamoDB using AWS SDK v3
  if (action === 'save') {
    const command = new PutItemCommand({
      TableName: process.env.TABLE_NAME,
      Item: {
        userId: {S: userId},
        timestamp: {N: Date.now().toString()},
        // event.body is already a JSON string, so store it as-is
        data: {S: event.body},
      },
    });

    await dynamoClient.send(command);
  }

  // Example: Read from S3 using AWS SDK v3
  if (action === 'fetch') {
    const command = new GetObjectCommand({
      Bucket: process.env.BUCKET_NAME,
      Key: `users/${userId}/data.json`,
    });

    const response = await s3Client.send(command);
    const data = await response.Body.transformToString();
    return {data: JSON.parse(data)};
  }

  return {message: 'Success', userId, action};
}
```

2. Using a Lambda Extension:

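You can also package chaos logic as a Lambda extension deployed as a layer, so the function code itself stays unchanged. The extension below is a simplified sketch. It registers for INVOKE and SHUTDOWN events, injects latency in its own process, and randomly selects a simulated failure for each invocation. An extension runs alongside the function rather than inside its request path, so those failures are only logged. To return real errors or delay the handler itself, one common technique is to proxy the Lambda Runtime API, for example through a wrapper script set with the AWS_LAMBDA_EXEC_WRAPPER environment variable.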

```python
#!/usr/bin/env python3
"""Lambda Extension for Chaos Engineering

This extension receives Lambda INVOKE events and simulates controlled failures
to exercise resilience tooling without modifying application code.

Limitations: the extension runs as a separate process from the function. The
latency it injects delays only its own processing (extending the invocation's
duration), and the failures it selects are logged, not returned to callers.
"""

import json
import os
import random
import signal
import sys
import time
from datetime import datetime, timezone
from typing import Dict, Optional, Tuple

import requests  # Not included in the Lambda Python runtime; bundle it in the layer

# Lambda Extensions API endpoint
EXTENSION_API = f"http://{os.getenv('AWS_LAMBDA_RUNTIME_API')}/2020-01-01/extension"

# Must match the extension's executable file name under /opt/extensions/
EXTENSION_NAME = 'chaos-extension'


class ChaosExtension:
    """Chaos injection engine for Lambda functions"""

    def __init__(self):
        self.extension_id: Optional[str] = None
        self.error_rate = float(os.getenv('CHAOS_ERROR_RATE', '0.0'))
        self.latency_ms = int(os.getenv('CHAOS_LATENCY_MS', '0'))
        self.max_latency_ms = int(os.getenv('CHAOS_MAX_LATENCY_MS', '5000'))
        self.enabled = os.getenv('CHAOS_ENABLED', 'true').lower() == 'true'

        try:
            self.failure_types = json.loads(
                os.getenv('CHAOS_FAILURE_TYPES', '["http_error"]')
            )
        except json.JSONDecodeError:
            self.failure_types = ['http_error']
            print('[chaos-extension] Warning: Invalid CHAOS_FAILURE_TYPES, using default')

        # random.choice needs a non-empty list of failure type names
        if not isinstance(self.failure_types, list) or not self.failure_types:
            self.failure_types = ['http_error']

        # Validate configuration
        self._validate_config()

    def _validate_config(self) -> None:
        """Validate chaos configuration parameters"""
        if not 0 <= self.error_rate <= 1:
            print(f'[chaos-extension] Warning: Invalid error_rate {self.error_rate}, clamping to [0,1]')
            self.error_rate = max(0.0, min(1.0, self.error_rate))

        if self.latency_ms < 0:
            print(f'[chaos-extension] Warning: Negative latency {self.latency_ms}ms, setting to 0')
            self.latency_ms = 0

        if self.latency_ms > self.max_latency_ms:
            print(f'[chaos-extension] Warning: Latency {self.latency_ms}ms exceeds max {self.max_latency_ms}ms')
            self.latency_ms = self.max_latency_ms

    def register(self) -> str:
        """Register extension with Lambda Extensions API"""
        try:
            response = requests.post(
                f'{EXTENSION_API}/register',
                json={'events': ['INVOKE', 'SHUTDOWN']},
                headers={'Lambda-Extension-Name': EXTENSION_NAME},
                timeout=5,
            )
            response.raise_for_status()
            self.extension_id = response.headers['Lambda-Extension-Identifier']
            print(f'[chaos-extension] Registered with ID: {self.extension_id}')
            return self.extension_id
        except Exception as e:
            print(f'[chaos-extension] Failed to register: {e}')
            sys.exit(1)

    def next_event(self) -> Dict:
        """Block until the next Lambda event arrives"""
        try:
            response = requests.get(
                f'{EXTENSION_API}/event/next',
                headers={'Lambda-Extension-Identifier': self.extension_id},
                timeout=None,
            )
            response.raise_for_status()
            return response.json()
        except Exception as e:
            print(f'[chaos-extension] Error getting next event: {e}')
            sys.exit(1)

    def should_inject_chaos(self) -> bool:
        """Determine if chaos should be injected for this invocation"""
        if not self.enabled:
            return False
        return random.random() < self.error_rate

    def inject_latency(self) -> None:
        """Inject artificial latency (between half and all of latency_ms)"""
        if self.latency_ms > 0:
            actual_latency = random.randint(self.latency_ms // 2, self.latency_ms)
            print(f'[chaos-extension] Injecting {actual_latency}ms latency')
            time.sleep(actual_latency / 1000.0)

    def inject_error(self) -> Optional[Tuple[int, Dict]]:
        """Pick a simulated error for this invocation, or None for no chaos"""
        if not self.should_inject_chaos():
            return None

        failure_type = random.choice(self.failure_types)
        timestamp = datetime.now(timezone.utc).isoformat()

        print(f'[chaos-extension] Injecting failure: {failure_type} at {timestamp}')

        error_scenarios = {
            'http_error': (500, {
                'error': 'Chaos: Simulated HTTP 500 Internal Server Error',
                'type': 'InternalServerError',
                'timestamp': timestamp,
            }),
            'timeout': (408, {
                'error': 'Chaos: Simulated request timeout',
                'type': 'RequestTimeout',
                'timestamp': timestamp,
            }),
            'throttle': (429, {
                'error': 'Chaos: Simulated throttling',
                'type': 'ThrottlingException',
                'timestamp': timestamp,
            }),
            'service_unavailable': (503, {
                'error': 'Chaos: Service temporarily unavailable',
                'type': 'ServiceUnavailable',
                'timestamp': timestamp,
            }),
            'bad_gateway': (502, {
                'error': 'Chaos: Bad gateway response',
                'type': 'BadGateway',
                'timestamp': timestamp,
            }),
        }

        if failure_type == 'timeout':
            # Simulate timeout with an actual delay in the extension process
            timeout_duration = random.randint(1, 5)
            print(f'[chaos-extension] Simulating {timeout_duration}s timeout')
            time.sleep(timeout_duration)

        return error_scenarios.get(
            failure_type,
            (500, {'error': 'Chaos: Unknown failure type', 'timestamp': timestamp}),
        )

    def process_invoke(self, event: Dict) -> None:
        """Process INVOKE event"""
        request_id = event.get('requestId', 'unknown')
        print(f'[chaos-extension] Processing invocation: {request_id}')

        # The function handler runs concurrently with this code, so this delay
        # lengthens the invocation's duration rather than delaying the handler.
        self.inject_latency()

        # The selected failure is only logged: an extension cannot rewrite
        # the function's response without proxying the Runtime API.
        error = self.inject_error()
        if error:
            status_code, error_body = error
            print(f'[chaos-extension] Simulated failure (logged only): {status_code} - {error_body["error"]}')

    def run(self) -> None:
        """Main extension loop"""
        print('[chaos-extension] Starting chaos extension')
        print('[chaos-extension] Configuration:')
        print(f'  - Enabled: {self.enabled}')
        print(f'  - Error rate: {self.error_rate * 100:.1f}%')
        print(f'  - Latency: {self.latency_ms}ms (max: {self.max_latency_ms}ms)')
        print(f'  - Failure types: {self.failure_types}')

        # Register extension
        self.register()

        # Main event loop
        while True:
            event = self.next_event()
            event_type = event.get('eventType')

            if event_type == 'INVOKE':
                self.process_invoke(event)
            elif event_type == 'SHUTDOWN':
                print('[chaos-extension] Shutdown event received')
                break
            else:
                print(f'[chaos-extension] Unknown event type: {event_type}')


def signal_handler(signum, frame):
    """Handle shutdown signals gracefully"""
    print(f'[chaos-extension] Received signal {signum}, shutting down')
    sys.exit(0)


if __name__ == '__main__':
    # Register signal handlers
    signal.signal(signal.SIGTERM, signal_handler)
    signal.signal(signal.SIGINT, signal_handler)

    try:
        chaos = ChaosExtension()
        chaos.run()
    except Exception as e:
        print(f'[chaos-extension] Fatal error: {e}')
        sys.exit(1)
```
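If your functions are written in Python, the in-code library approach from option 1 translates naturally into a decorator. The sketch below mirrors the JavaScript ChaosInjector above and reuses its environment variable names (CHAOS_ENABLED, CHAOS_ERROR_RATE, CHAOS_LATENCY_MS). The `chaos` decorator, the 503 response body, and the example `handler` are illustrative assumptions. Unlike the JavaScript version, it treats chaos as opt-in: nothing is injected unless CHAOS_ENABLED is set to "true".

```python
import functools
import os
import random
import time
from typing import Any, Callable, Dict


def chaos(handler: Callable[[Dict, Any], Dict]) -> Callable[[Dict, Any], Dict]:
    """Wrap a Lambda handler with opt-in latency and error injection."""
    @functools.wraps(handler)
    def wrapper(event: Dict, context: Any) -> Dict:
        # Opt-in: do nothing unless CHAOS_ENABLED is explicitly "true".
        if os.getenv("CHAOS_ENABLED", "false").lower() == "true":
            latency_ms = int(os.getenv("CHAOS_LATENCY_MS", "0"))
            if latency_ms > 0:
                time.sleep(latency_ms / 1000)
            error_rate = float(os.getenv("CHAOS_ERROR_RATE", "0"))
            if random.random() < error_rate:
                # Short-circuit with a 503 instead of calling the real handler.
                return {"statusCode": 503,
                        "body": '{"error": "Chaos: Service unavailable"}'}
        return handler(event, context)
    return wrapper


@chaos
def handler(event: Dict, context: Any) -> Dict:
    # Hypothetical business logic.
    return {"statusCode": 200, "body": '{"message": "Success"}'}
```

The environment is read on every call rather than at import time. That keeps the wrapper easy to test, and it lets you enable or tune chaos by changing configuration alone, with no code changes.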
